Insights to Guide Smarter Decisions

Explore how industry leaders are harnessing temporal graph technology to unlock new opportunities. From thought-provoking blogs to real-world case studies and whitepapers, our business resources offer the strategic perspective you need to stay ahead in a data-driven world.

Why delivery optimisation is making transformation worse

The Missing Link for AI Agents: Why a Native Temporal Graph is Non-Negotiable

Prisoner's Dilemma and advanced Graph analytics

Are Temporal Graphs Relevant to You?

The Graph Model vs. The Relational Model

The Alan Turing Institute: The Dizzy Potential of Dynamic Graphs

The hidden failures of transformation

Holistic Network Analysis Over Time

Reduce False Positives

High-Performance Investigation at Scale

Advanced Behavioural Sequencing

Unified Temporal Engine for AI

True Semantic Understanding Across Time

High-Performance Context at Scale

Evolving Context Beyond Static Vectors

Dynamic Risk Scoring

Unmasking Collusion & UBOs

Agentic AI Framework Support

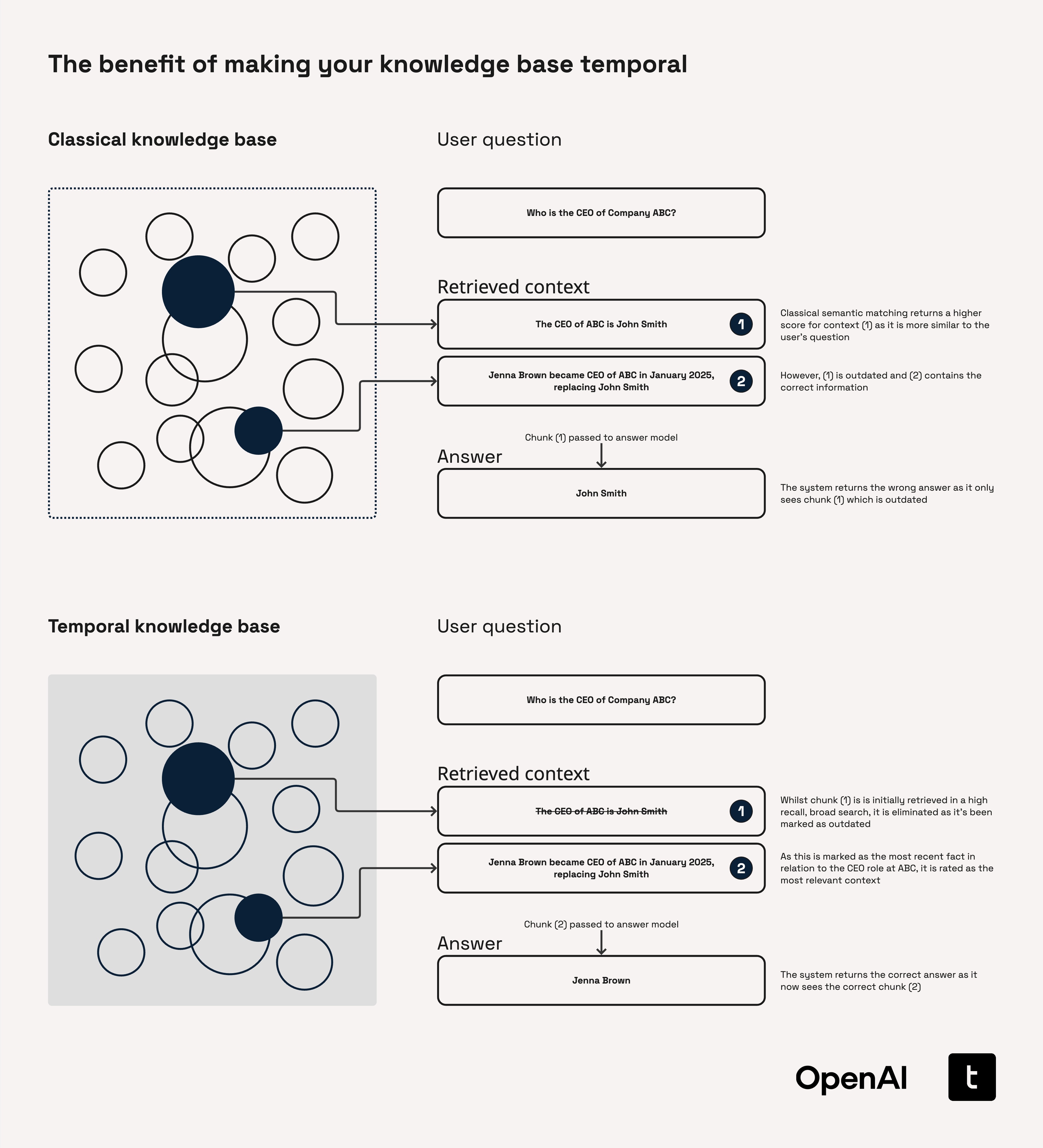

Advanced GraphRAG with Temporal Depth

Pometry Wins 2025 Swiss Cyber Startup Challenge

Pometry Appoints Edward Sherrington as Chief Product Officer

Raphtory v0.16.0: Core Refinements, Metadata, and Major Performance Boosts

Syntheticr.ai Supercharges AML Data Demos with Pometry's Temporal Graph Insights

Raphtory v0.15.0: Perspectives, Powerful Aggregations, and Parquet IO

Pometry Wins Prestigious BIS Innovation Hub 2025 Analytics Challenge

Raphtory v0.14.0: Faster Queries, Powerful Filtering, and New Algorithms

Raphtory v0.13.0: Interactive UI (Alpha), Python Ergonomics, and More!

Raphtory v0.12.0: Vector APIs, Edge Filtering, and a New On-Disk Format

Pometry Appoints Renaud Lambiotte as Scientific Advisor

Pometry Joins LDBC Task Force To Build Next Generation of Financial Graph Benchmarks

Pometry Joins LDBC Board of Directors

Pometry's ability to ingest from and write to any data source, its small footprint allowing for flexible deployment (including ephemeral use), and its design philosophy of not needing to be the "centre of the universe" mean you can integrate it strategically without being forced into a proprietary ecosystem for all your data needs.

Pometry is architected from the ground up to treat time as a fundamental aspect of data. Every piece of data, every relationship, and every property is understood within its temporal context, allowing for efficient queries and analytics that track evolution and change as first-class citizens, rather than an afterthought.
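To make the idea of time as a first-class citizen concrete, here is a minimal sketch in plain Python. It is not Pometry's or Raphtory's API: the `TemporalGraph` class and its methods are hypothetical names invented for illustration, and a production engine would use far more efficient storage. The sketch only shows the principle that every edge update keeps its timestamp, so the same graph can be queried over any window or as of any point in time.

```python
from bisect import bisect_left, bisect_right
from collections import defaultdict


class TemporalGraph:
    """Toy temporal graph (hypothetical, for illustration only)."""

    def __init__(self):
        # (src, dst) -> sorted list of timestamps at which the edge was observed
        self._edge_times = defaultdict(list)

    def add_edge(self, time, src, dst):
        times = self._edge_times[(src, dst)]
        # Insert at the sorted position so out-of-order arrivals are handled.
        times.insert(bisect_right(times, time), time)

    def edges_in_window(self, start, end):
        """Edges with at least one update in the half-open window [start, end)."""
        result = set()
        for edge, times in self._edge_times.items():
            i = bisect_left(times, start)  # first timestamp >= start
            if i < len(times) and times[i] < end:
                result.add(edge)
        return result

    def edges_at(self, time):
        """Edges that exist as of `time`, i.e. first seen at or before it."""
        return {e for e, times in self._edge_times.items() if times[0] <= time}


g = TemporalGraph()
g.add_edge(1, "alice", "bob")
g.add_edge(5, "bob", "carol")
g.add_edge(9, "alice", "bob")

early = g.edges_in_window(0, 5)   # only the alice -> bob edge
late = g.edges_in_window(5, 10)   # both edges were active
as_of_3 = g.edges_at(3)           # graph as it looked at time 3
```

Because no update is overwritten, questions such as "what did the network look like then?" and "what changed between two dates?" become ordinary queries rather than something reconstructed from snapshots.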

The Python APIs offer ease of use, rapid development, and seamless integration with the rich Python data science ecosystem. The high-performance (HPC) Rust APIs are for advanced users who need to extract every ounce of performance, work directly with data buffers, or build custom, ultra-low-latency extensions using lock-free mechanisms. The high-level Rust and Python APIs have 1:1 parity; the HPC Rust APIs are slightly more complex and are tailored for advanced users.

Pometry is designed to be open. It can ingest data from virtually any source (CSVs, Parquet, Kafka, SQL databases, data lakes like S3/Azure Blob) and write results and enriched data back out to any system. It doesn't require you to centralise all your data within it.

Pometry achieves its performance through a combination of highly optimised Rust code, advanced techniques such as lock-free data structures, vectorised execution (leveraging SIMD/AVX512), efficient memory management, and an architecture designed specifically for graph workloads. This allows us to maximise the utility of modern CPUs without requiring massive clusters for many demanding tasks.

The small footprint enables incredible deployment flexibility: run Pometry on edge devices, embed it directly within your Python ML pipelines for zero-latency analytics, deploy in resource-constrained environments, or use it as a lightweight, powerful server. It simplifies distribution and reduces overhead.

Pometry is extremely storage-efficient. We employ advanced compression techniques that significantly reduce the storage footprint of your data, temporal or static. We’ve demonstrated compressing 4TB of raw CSV data down to just 350GB in our optimised graph format. With highly efficient on-the-fly decompression, you save on storage costs without a performance impact on analytics. This means you can handle very large datasets without necessarily needing massive storage infrastructure, contributing to lower costs and better performance on commodity hardware.