The Missing Link for AI Agents: Why a Native Temporal Graph is Non-Negotiable

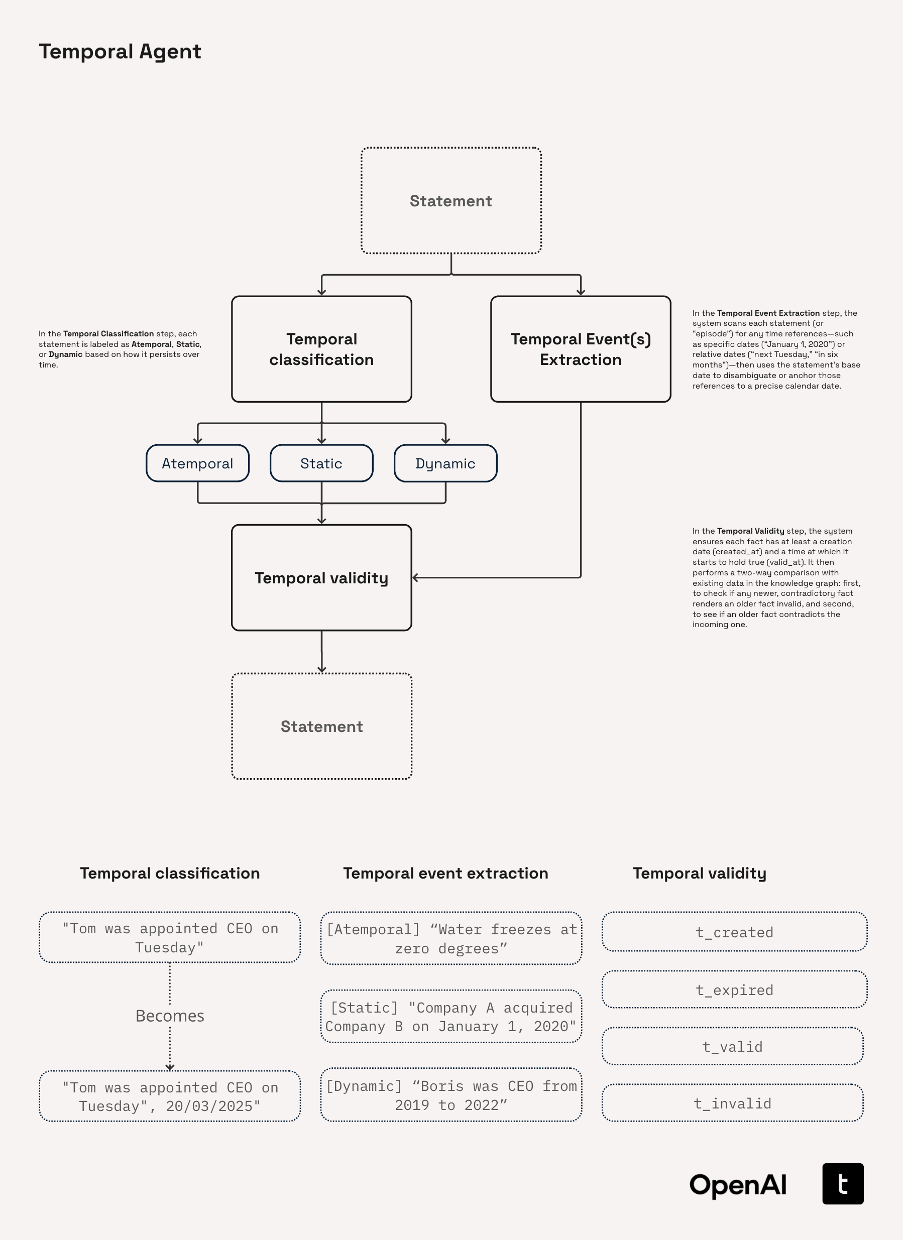

The recent OpenAI Cookbook on “Temporal Agents with Knowledge Graphs” has provided a brilliant blueprint for the next generation of AI: agents that don’t just answer questions, but reason over time, understand evolving contexts, and maintain a persistent, accurate memory. The cookbook perfectly outlines the what and the why – and the need to systematically update and validate a knowledge base, perform multi-hop retrieval, and resolve temporal conflicts.

But this raises a critical question: what is the ideal how? To record knowledge over time, you need underlying engine powerful and flexible enough to make this vision a reality, not just in a notebook.

The answer is a native temporal graph. And it’s not just a “nice to have”; it’s the fundamental architectural choice that separates a truly temporal agent from a static one with a clock.

At Pometry, we’ve been obsessed with this problem. We built our platform from the ground up because we saw this massive intelligence gap: traditional databases miss relationships, and conventional graph databases are blind to change. These are precisely the challenges the OpenAI Cookbook confronts. Below we explore why a native temporal graph like Pometry is the perfect fit to power the agents described.

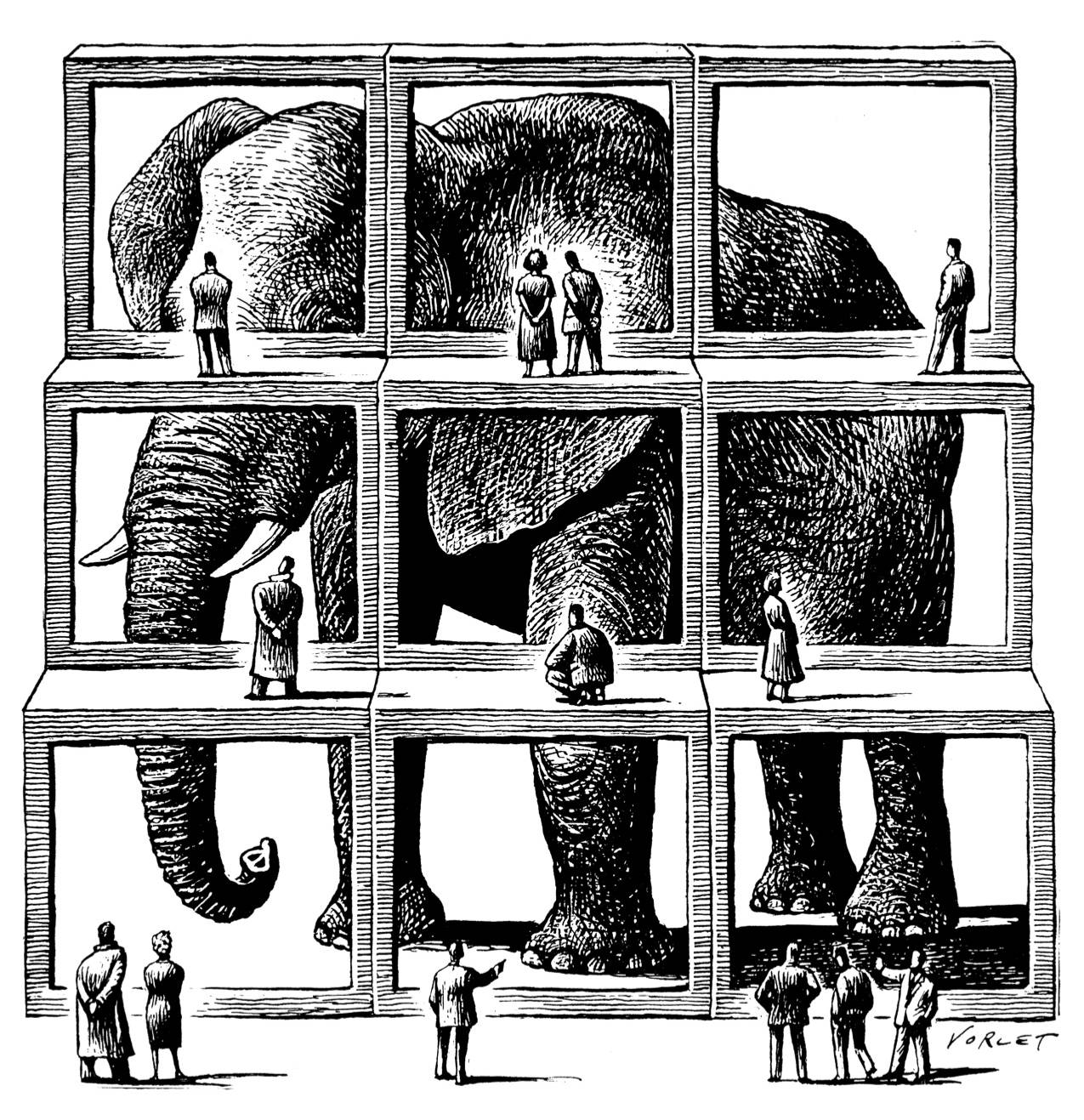

Beyond Snapshots: Thinking in Time, Natively

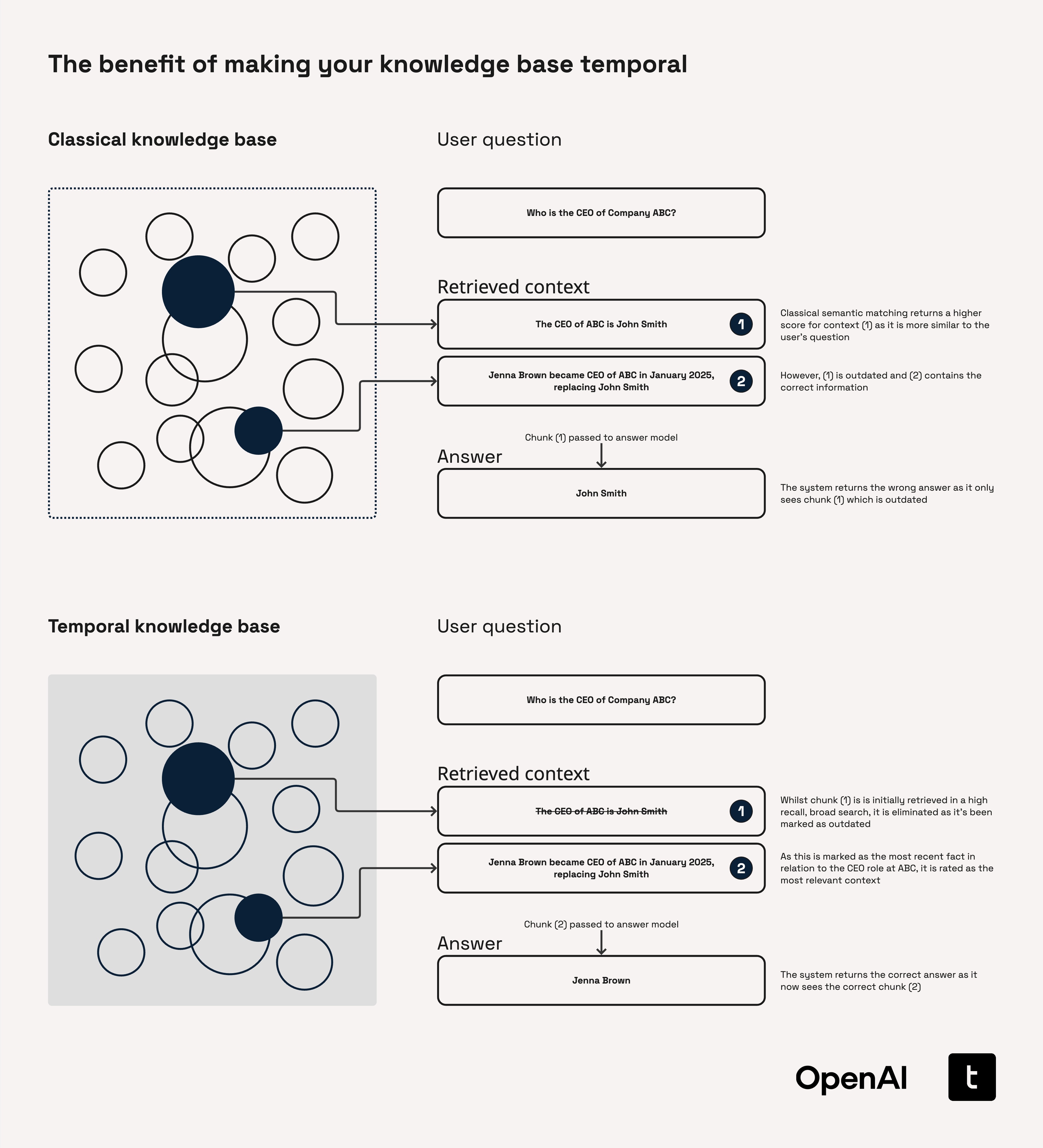

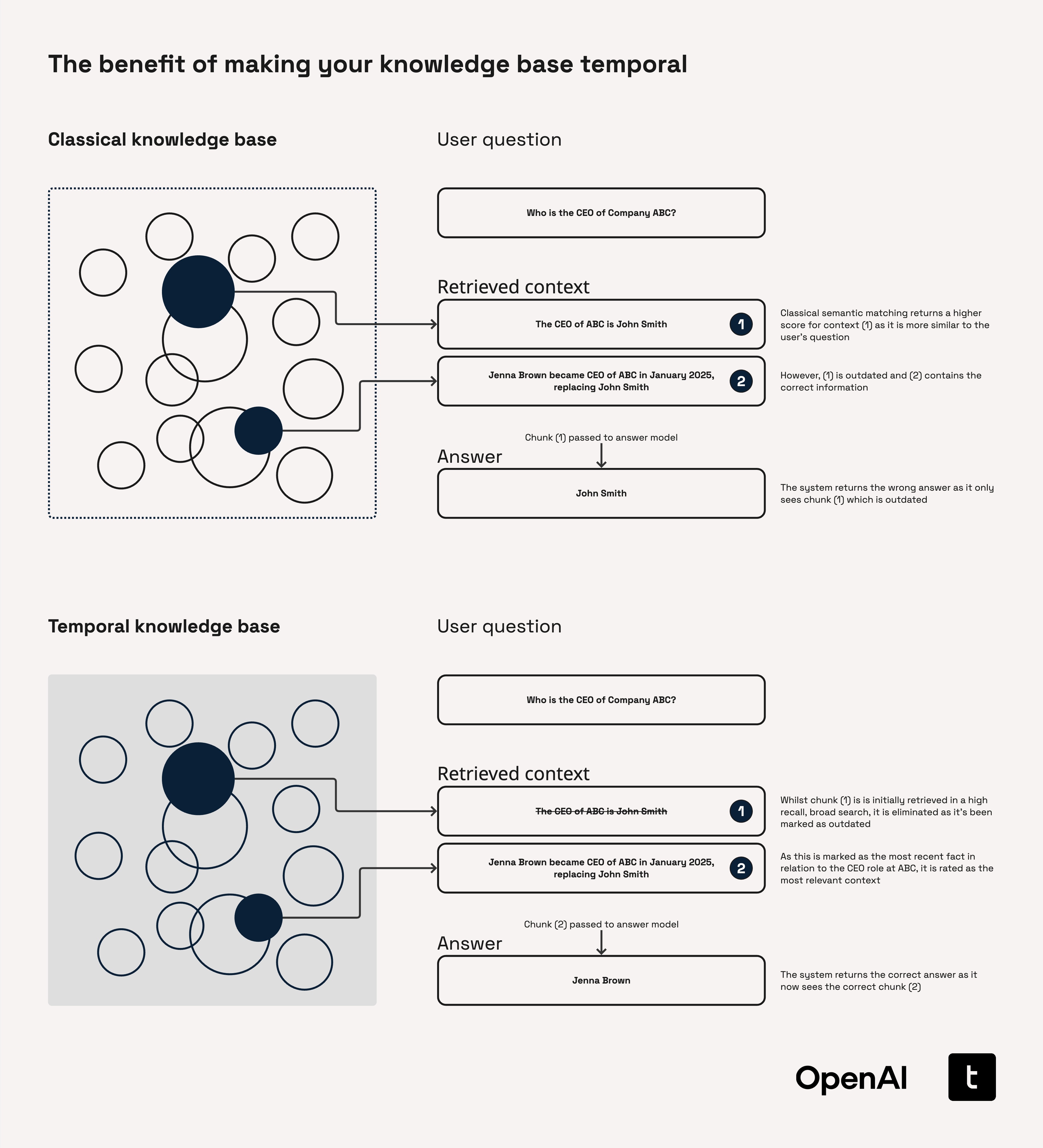

A key challenge highlighted in the cookbook is keeping a knowledge graph “current and relevant” as new data arrives. Conventional graph databases (and the notebook examples using libraries like NetworkX) often handle this by taking static snapshots of the graph at different points in time. This approach is cumbersome, inefficient, and fails at scale.

A native temporal graph architecture is fundamentally different. Instead of storing disconnected states, Pometry models change itself as a first-class citizen. This means you can ask questions that are impossible for other systems, such as:

- “Show me all entities whose centrality score increased by more than 50% in the last month.”

- “Find all sequences of events where a new account was created, received funds from a high-risk entity, and then sent those funds to more than five new accounts within a 24-hour window.”

This is the level of reasoning required by the cookbook’s “Invalidation Agent” and “Temporal Agent,” which need to resolve conflicts and understand the sequence and validity of events. With Pometry, this isn’t a complex, multi-step workaround; it’s a direct query without risk for failure.

Performance at Scale: From Laptop to Supercomputer

The cookbook rightly points out that the effectiveness of retrieval algorithms is limited by the “quality and freshness” of the database. But it’s also limited by speed. An agent that takes hours to reason over a large dataset is not practical.

Pometry’s core architecture was designed for extreme efficiency. This isn’t about brute force; it’s about deep algorithmic innovation, developed through partnerships with organisations like Los Alamos National Labs.

- Ludicrous Speed: We’ve been proven to be 200x faster than state-of-the-art U.S. Department of Défense graph solutions with a fraction of the hardware.

- Unmatched Scale: We can process terabytes of data on a single laptop – a task that would otherwise require a supercomputer.

For an AI agent, this means it can reason over its entire history, not just a recent subset, without crippling delays. This unlocks a deeper, more accurate understanding of long-term trends and subtle, evolving patterns.

A Production-Ready Python Experience

For technical users, it’s clear the cookbook opted for NetworkX due to its ease of use and seamless integration into a Python environment. It’s simple, effective for in-memory analysis, and gets the point across without operational overhead. Our platform is designed to be a production-grade, drop-in replacement for other graph databases as well as NetworkX. Pometry’s engine can be embedded directly within your Python and data science processes, exposing graph-native operations and over 50 graph algorithms directly through a Python API while scaling to billions of relationships on a mere laptop. This completely removes the need for an intermediary query language like Cypher or Gremlin, allowing developers to stay in their native environment while benefiting from a high-performance, persistent, and scalable temporal graph backend.

AI with Agency: The GraphRAG Native LLM

Pometry is more than just a database; it’s an intelligence platform with a GraphRAG Native LLM integration that can reason over your dynamic, evolving data. This directly aligns with the cookbook’s vision of multi-step retrieval and using LLMs to traverse a graph. Our AI has true agency over the temporal graph, a capability only possible because of our native temporal foundation.

Furthermore, our unrivalled developer flexibility, with a tiny 10MB binary that can be embedded directly within a Python process, makes integrating this power into an agent’s workflow seamless.

Closing the Loop: From Insight to Actionable Alerts

The ultimate goal of a temporal agent is to act. The cookbook describes building a pipeline that extracts entities and relations to keep a graph up to date. The Pometry Alerts Engine is the operational realisation of this concept.

Our engine allows users to define sophisticated, multi-faceted alerts based on temporal queries, graph algorithms, or even external ML model outputs. These aren’t just logs; alerts can materialise findings and their contextual subgraphs directly into the UI for immediate investigation. This turns passive insights into active, automated foresight – a core tenet of proactive intelligence.

The Future is Temporal

The OpenAI Cookbook provides a powerful blueprint for the future of AI agents. But a blueprint needs the right materials to build something strong and scalable. By combining a native temporal architecture, extreme performance, and an integrated AI reasoning layer, Pometry provides the essential foundation to move temporal agents from the cookbook to the real world.

You might also like

Discover insights and tools for data analysis.

The hidden failures of transformation

Why delivery optimisation is making transformation worse

Holistic Network Analysis Over Time

Unlock Your

Data's Potential

Discover how our tool transforms your data analysis with a personalized demo or consultation.